There are a lot of cases in the MyCoach platform where we want to do something when something else happened.

For example, when a new substitution is added to a game we might want to recompute the two player’s play time, when a new public exercise is added, modified or removed we want to update our search index accordingly.

Computing play time

If we take the example of football, the play time depends on the following data:

- The game composition (Who started the game)

- The substitutions (Who entered the game and who exited the game)

- The red cards (Who exited the game)

If we know that a player started the game and got subbed out at the 70th minute of the game, its play time will be 70 minutes, the player who entered at the 70th minute and finished the game will have a play time of 20 minutes.

For a long time we have computed the play time for each api call, each time the frontend needs to display the game data, it will call our backend and our backend will fetch the game facts in memory and compute the play time for all of the players.

What’s wrong with this approach ?

Nothing is inherently wrong, we do have performance issues when we want to see statistics across a whole season. If we need to see the average play time per player for the whole season, we will need to fetch all the game compositions, the substitutions and the red cards and compute the data for each players.

This works too, but this is not really responsive for the customer it can take up to 5 seconds for the api to return the play time. We try to keep the UI responsive by having endpoints who reply in less than 1 second (most of them will reply in less than 500ms) but our limit for complicated endpoints will be 1 second.

So what do we do ?

We can recompute the play time each time one of the game facts involved in the play time changes. If we take our preceding example, let’s say that the substitution changes from the 70th minute to the 80th minute.

When this game fact changes we need to update the play time from the first player from 70 minutes to 80 minutes, and the play time of the second from 20 minutes down to 10 minutes.

Recomputing the play time with manual events

In order to change the play time, one of the game facts must be added, removed, or deleted.

When an user updates the game facts, some API calls are made from the frontend to the backend. This is where we know that the game facts have changed and that a computation must be made.

We want to keep our logic of updating the events and recomputing the play time decoupled from the game events management logic. What we can do is push some messages to our RabbitMQ server with the informations of the game facts that have changed. Then consume those messages, compute the play time and store it.

This way when the frontend asks the backend « Give me the all the play times for this game », we can just retrieve the value from the database and send it. This is the same if the frontend asks us « Give me the average play time for all the players for the whole season ». It gets even more interesting for the second use case since we can do the average at the database level and don’t even need to fetch everything in memory and do the average in memory.

By just sending events from our API we will be able to recompute the play time when game facts changes.

The problem is, the API is not our only source capable of creating game facts.

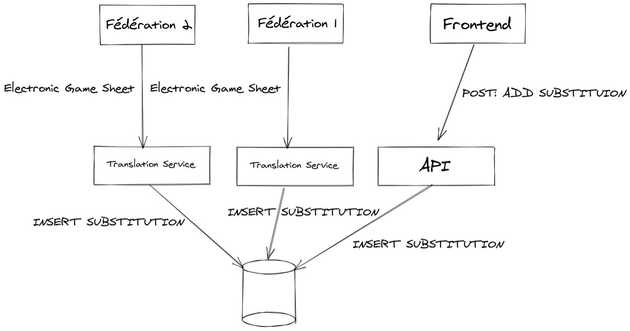

We partnered with a lot of federations, we developed services to ingest data from those federations in real-time. One of those service is called « Feuille de match informatisée » (Electronic match sheet), the federation sends us all the game facts in its own format and we translate it to our model and store it to the database.

Here’s what it looks like:

We have different services capable of creating game facts. This means that we’ll need to change all these services in order to send the proper events and make sure that play time is re-computed.

We have different services capable of creating game facts. This means that we’ll need to change all these services in order to send the proper events and make sure that play time is re-computed.

Can we do better ?

Yes we can !

Streaming database change with Change Data Capture

Introducing: Change Data Capture . CDC is a technique to capture change happening at the database level and to broadcast the changes to the outer world by streaming the corresponding events.

How can we do CDC ? Well, it depends on your database type.

For relational databases such as MySQL and PostgreSQL it’s pretty straightforward, you’ll setup your database to enable binary logging (which is used for replication) and you spin-up a service which will listen to the binary log in real time and stream events corresponding to what is happening on your database.

There are different open source tools for CDC: Debezium, Maxwell, etc.

We settled on Maxwell for its simplicity and because it supports MySQL. We needed support for NATS which is our publish/subscribe system of choice.

My mate Vu Hai and I, have developed the nats connector for maxwell, which will probably be merged to main branch when your read those lines.

The setup is simple, you juste write a configuration file and spin up a docker container and you get your changes published to your Nats subject in real time.

We configured the nats subject to the following: %{database}.%{table}.%{type}

So if se go back to our example of computing play times.

With this configuration we are able to listen to the different game facts changes and react accordingly, for play time we will listen to:

- MyCoachdb.SUBSTITUTIONS.*

- MyCoachdb.CARDS.*

- MyCoachdb.COMPOSITIONS.*

When we receive a message on one of those subjects we know that some play time needs to be re-computed for some player and some game. But how do we know which game and which player ?

That’s where maxwell is very handy, in the payload of the message we get the current state of the data (as well as the old state). Since the compositions, substitutions and the card table contains both the game and players involved, we know for which player and for which game the play time needs to be recomputed.

We just need to apply our usual algorithm, fetch the game facts for the players and the given game, compute the play time and store it.

Conclusion

We now generate events from our single source of truth, we don’t need to modify all the services to generate the events by hand. No matter if we integrate more partners in the future we don’t have to worry about sending events for game facts changes, because we are plugged to the database we’ll be notified no matter what.

We have a lot of use cases for CDC, for example in a previous post I explained how we built a distributed scheduler for training notifications. We used to send training changes notification by hand too. We removed this code and we plugged our notification service to maxwell. Thanks to the state informations available in the payload we can act on the notification by re-scheduling notifications only if the date has changed.

We also maintain an index inside a mongodb collection for our Exercises feature. This index gathers data from a lot of tables and allows us to do lookups very fast and provide the smoothest experience to the customer. Once again here we were sending events by hand to update the index when an exercise changed. Now we just need to listen to some subjects and re-index the exercise when there’s a change in one of the tables related to the exercises.